Let’s face it head-on: AI makes a lot of people uneasy.

That’s especially true when it involves their health. Don’t get us wrong; at Healthee, we love and embrace AI as a tool to make navigating a complex healthcare space easier. However, we understand that it’s one thing to let a chatbot recommend a playlist. It’s another to trust it with your medical history or health benefits. That discomfort isn’t irrational. Healthcare is deeply personal, and people have every right to ask how their data is being used, stored, and protected.

In healthcare spaces, trust doesn’t come from flashy features or big promises. It comes from transparency. Companies using AI in healthcare need to be upfront about what their technology does, what it doesn’t do, and how data flows through their system. Questions like “Are you HIPAA-compliant?”, “Do you train your models on PHI?”, and “Can employees opt out?” aren’t just due diligence. They’re the foundation of trust.

Beyond answering those questions, companies also have to build for safety, not just compliance. That means storing only the data that’s essential, using real-time integrations, and making sure users have control over their experience. People don’t want to be told to relax. They’d much rather see that someone has actually thought this through.

Common Data Security Questions to Ask AI Vendors

AI has the potential to positively transform healthcare (and many other industries), but with that potential comes a critical challenge: earning trust.

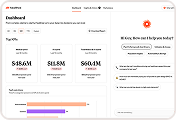

As AI adoption accelerates, so does scrutiny from enterprise stakeholders. HR leaders love streamlined, automated processes. Employees love the simplicity. But IT, legal, and InfoSec teams? You need proof. You need to know that a new platform won’t introduce new risks. And you’re right to ask.

It’s common to ask tough questions of potential AI vendors, including:

- Are you HIPAA-compliant? The answer should always be a resounding, “Yes!” Without a fully HIPAA-compliant platform with strict encryption standards, audit trails, and access controls that safeguard protected health information (PHI), your people’s sensitive information could be at risk.

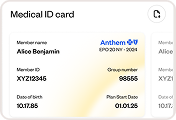

- Do you train your AI on employee health data? Answers will vary, but if you want to maximize security, the answer should be no. For example, Healthee’s AI-powered personal health assistant, Zoe, never uses PHI or personally identifiable information (PII) to train or refine our AI models. In some instances, we use de-identified interactions to improve our product, but only within a secure, HIPAA-compliant ecosystem.

- Are your systems SOC 2 certified? Again, if you want the best for your people, this should be an easy yes. A SOC 2 Type II report means an independent, neutral third-party auditor has examined the vendor’s controls, covering security and often availability and confidentiality, and verified that they worked as intended over a period of time.

- How is personal information handled and secured? As with any SaaS vendor, data and personal information must be stored somewhere, and it’s vital to know where and how. Look for a secure-by-design approach. In practice, this means all data is encrypted at rest and in transit, with access strictly limited to authorized personnel under role-based policies.
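To make “role-based access” and “audit trails” a little more concrete, here is a minimal Python sketch of the idea. It is an illustration only, not how Healthee or any particular vendor implements it: the role names, permission names, and the `check_access` and `AuditLog` helpers are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping. A real vendor would manage this
# in an identity and access management system, not in application code.
ROLE_PERMISSIONS = {
    "support_agent": {"read_profile"},
    "privacy_officer": {"read_profile", "read_phi", "delete_record"},
}


@dataclass
class AuditLog:
    """In-memory audit trail; a real one would be append-only and tamper-evident."""
    entries: list = field(default_factory=list)

    def record(self, user, role, action, allowed):
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })


def check_access(user, role, action, log):
    """Return True if the role permits the action. Every attempt is logged."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, allowed)
    return allowed


log = AuditLog()
granted = check_access("alice", "privacy_officer", "read_phi", log)
denied = check_access("bob", "support_agent", "read_phi", log)
```

The point worth asking a vendor about is the second call: a denied attempt still lands in the log, so an auditor can see who tried to reach sensitive data, not just who succeeded.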

These questions are just the beginning, though. To truly evaluate the security and reliability of any AI vendor, CISOs and InfoSec teams need to go deeper, asking the right technical and operational questions to assess risk, data handling practices, and long-term trust.

Now, let’s look at some more technical AI data security questions to keep in your back pocket.

Deeper Dive: What Data Teams Are Asking AI Vendors

If you’re on an InfoSec team or working as a CISO, your job is to think ahead. When someone brings in a new AI tool, you’re not just looking for the features. You’re asking if this thing could be a risk. Is it secure? Is it handling data the right way? Can we trust it?

5 technical data security questions to ask vendors

Here are a few of the more technical questions that are important to ask, and the answers you should expect:

- How do you balance improving your AI experience to provide employees value while protecting their data? AI vendors with your best interests at heart will be honest about how they use your people’s data. For example, Zoe, Healthee’s AI assistant, never learns from personal health information shared in conversations. We don’t use PHI or PII to train anything, and we maintain our HIPAA compliance and SOC 2 Type II certification through strict data security protocols. However, to continuously improve the AI experience and deliver a top-notch product, we use de-identified user interactions to help train our models to better serve users each time they return to the app.

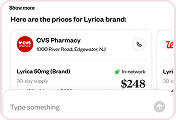

- What data do you actually store, and how long do you keep it? Data storage, transfer, maintenance, and removal are core to SaaS hygiene and security. If your vendor relies on data from outside sources, they should pull it from real-time, secure APIs and maintain a clear policy for which data is essential to store and which is not.

- Can users opt out or request their data be deleted? Vendors should be flexible and know that each employee will have a different experience with their app. When that flexibility is built into the core platform, employers get peace of mind knowing employees can have their data deleted. If someone wants their data gone, your AI vendor should take care of it.

- Who at your organization can see sensitive data? Only a small number of authorized team members should have access to sensitive information, and only when it’s required to support a current action. Access must be role-based and logged. Always.

- Do you do regular security testing? You’d better hope they do! Bad actors constantly evolve their tactics, so your AI vendor should always be adapting in response. Internal and third-party security reviews should be built into their operating model, with routine audits and penetration testing to keep their systems tight and up to date.

These prompts help separate the vendors who just say they’re secure from the ones who actually are.
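The storage and deletion questions above come down to two simple rules: data older than an agreed retention window is purged, and a deletion request removes everything tied to that user. The Python sketch below shows the shape of those rules under stated assumptions. The 365-day window, the record layout, and the function names are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window. The real value is something to ask the vendor.
RETENTION = timedelta(days=365)


def purge_expired(records, now, retention=RETENTION):
    """Keep only records that are still inside the retention window."""
    return [r for r in records if now - r["created_at"] <= retention]


def delete_user_data(records, user_id):
    """Honor a deletion request by dropping every record for that user."""
    return [r for r in records if r["user_id"] != user_id]


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"user_id": "u1", "created_at": now - timedelta(days=30)},
    {"user_id": "u2", "created_at": now - timedelta(days=400)},
    {"user_id": "u3", "created_at": now - timedelta(days=10)},
]

kept = purge_expired(records, now)            # u2 is past the window
after_request = delete_user_data(kept, "u1")  # u1 asked to be deleted
```

A real system also has to reach backups, logs, and downstream copies, which is exactly why it is worth asking a vendor how far their deletion process extends.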

Not sure if you’re asking all the right questions? The Healthee team is always happy to walk through the details and show you exactly how we keep data safe. Every AI vendor should be willing and able to answer data security questions like these. If they aren’t, you might be looking in the wrong place.

Beyond the Basics: More Questions to Ask During the RFP Process

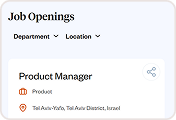

The RFP (Request for Proposal) process isn’t just about comparing features. It’s your moment to assess risk, understand tradeoffs, and uncover how a vendor truly operates behind the scenes. With AI, that means asking a new layer of questions, ones that go beyond HIPAA checkboxes or platform demos.

That’s why the RFP is the perfect time to get detailed. You’re not just buying a product. You’re trusting a vendor to handle sensitive health information, deliver accurate support, and hold up under scrutiny from employees, security teams, and regulators alike.

We may be on a new frontier of AI, which makes it important to ask about more than compliance. Dig into data lineage, decision transparency, and model accountability to get a clearer picture. Vendors should be able to articulate where their AI models’ data comes from, how often those models are updated, and what governance is in place to detect bias or misinformation. Ask how their systems explain decisions made by AI tools, especially when those decisions affect healthcare navigation or benefits eligibility. You’ll want to understand whether they have human oversight, how they manage flagged issues, and what logs are available for audits. If they can’t answer these questions clearly, that’s a red flag, especially when your employees’ health and trust are on the line.

The Bottom Line: AI Can Be Data-Secure, Transparent, and Transformational

AI is changing how people manage their health, but trust doesn’t come automatically. It starts with asking the right questions.

HR, IT, and InfoSec leaders need to get these key data security questions answered by AI vendors before signing on the dotted line. When health data is involved, compliance isn’t enough. Transparency, strong safeguards, and clear answers are what matter. At Healthee, we welcome those questions. We’re not afraid to show we’re serious about security.

Want to talk more about how Healthee is using AI to transform employee benefits while keeping data security airtight? Book a demo with us below!