Artificial intelligence (AI) is rapidly transforming the healthcare and benefits landscape. From streamlining administrative tasks to delivering personalized care recommendations, AI is already reshaping how employers manage health benefits. But as this technology evolves, so do the challenges around data privacy and compliance.

For HR leaders, understanding the intersection of AI and the Health Insurance Portability and Accountability Act (HIPAA) is critical. HIPAA remains the primary safeguard protecting sensitive health data, yet many AI tools in the market fall short of meeting its standards.

In this blog, we’ll break down what HIPAA compliance means in the context of AI-powered health tech. You’ll learn how AI introduces new risks around data privacy, what to look for in HIPAA-compliant digital tools, and how platforms like Healthee build data protection into every layer of the user experience.

What is HIPAA? A Brief Overview

HIPAA, or the Health Insurance Portability and Accountability Act of 1996, is a federal law that sets national standards for protecting sensitive patient health information. For HR leaders, understanding HIPAA is essential not just for compliance, but also for building trust with employees who rely on employer-sponsored health plans.

What Does HIPAA Cover?

HIPAA applies to covered entities (health plans, health care clearinghouses, and health care providers that transmit health information electronically in standard transactions) and to the business associates that handle protected health information (PHI) on their behalf. In the benefits world, that includes employer-sponsored group health plans, health insurance carriers, and third-party administrators (TPAs). The employer itself is generally not a covered entity, but HR teams that administer a group health plan or access PHI on the plan’s behalf must follow HIPAA’s rules when they do so.

The law is structured around three core rules:

- The Privacy Rule defines how PHI can be used and disclosed. It grants employees rights over their health data and limits who can access that information.

- The Security Rule focuses on electronic PHI (ePHI), requiring companies to implement administrative, physical, and technical safeguards to protect data integrity, confidentiality, and availability.

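- The Breach Notification Rule requires that affected individuals and the Department of Health and Human Services (HHS) be notified when a breach of unsecured PHI occurs, and that prominent media outlets also be notified when a breach affects more than 500 residents of a single state or jurisdiction.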
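
The Breach Notification Rule’s timelines are easier to reason about with a worked example. The Python sketch below computes the outer federal deadlines from a discovery date under simplified assumptions: notice to individuals no later than 60 calendar days after discovery; notice to HHS within that same window for breaches affecting 500 or more people, or within 60 days after the end of the calendar year for smaller breaches; and media notice when more than 500 residents of one state or jurisdiction are affected. The function and field names are hypothetical, and the sketch ignores law-enforcement delays, stricter state deadlines, and business associate obligations.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Optional


@dataclass
class NotificationDeadlines:
    individuals: date      # outer limit; notice is due "without unreasonable delay"
    hhs: date              # depends on how many people are affected
    media: Optional[date]  # only required above the per-state threshold


def breach_deadlines(discovered: date, affected_by_state: Dict[str, int]) -> NotificationDeadlines:
    """Simplified outer federal deadlines for a breach of unsecured PHI (hypothetical helper)."""
    outer_limit = discovered + timedelta(days=60)
    total_affected = sum(affected_by_state.values())

    if total_affected >= 500:
        hhs_deadline = outer_limit
    else:
        # Smaller breaches can be reported to HHS within 60 days after the end of the year.
        hhs_deadline = date(discovered.year, 12, 31) + timedelta(days=60)

    # Media notice applies when more than 500 residents of one state or jurisdiction are affected.
    needs_media = any(count > 500 for count in affected_by_state.values())
    return NotificationDeadlines(
        individuals=outer_limit,
        hhs=hhs_deadline,
        media=outer_limit if needs_media else None,
    )


# Hypothetical breach discovered March 1, 2024, affecting 620 Californians and 40 Nevadans.
deadlines = breach_deadlines(date(2024, 3, 1), {"CA": 620, "NV": 40})
```

For this example, all three deadlines land on April 30, 2024. Treat these dates as ceilings rather than targets, since the rule calls for notice without unreasonable delay.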

But HIPAA isn’t the whole picture. Many states have additional privacy laws that layer on stricter requirements. For example, California’s Confidentiality of Medical Information Act (CMIA) expands patient protections beyond HIPAA standards. That means employers must track their obligations under state law as well as federal law.

Why HIPAA Matters in HR

Many HR teams access or transfer ePHI or personally identifiable information (PII) when coordinating employee benefits, handling disability claims, or managing wellness programs. With more data flowing through digital platforms than ever before, HR leaders must ensure every system they use, from open enrollment software to AI-powered health assistants, complies with HIPAA’s security standards.

Ignoring HIPAA requirements can result in significant penalties. In 2023 alone, the HHS Office for Civil Rights (OCR) resolved more than a dozen HIPAA enforcement actions, with settlements and civil money penalties ranging from $25,000 to more than $1 million [1].

HIPAA is not just a box to check. It is a foundation for protecting employee trust, avoiding legal risk, and ensuring the responsible use of health data in an increasingly digital ecosystem.

The Intersection of AI and HIPAA Security

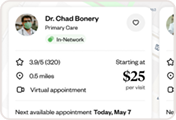

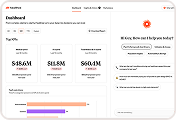

AI is making health benefits faster, smarter, and more personalized. From automating open enrollment to recommending in-network providers, AI can reduce administrative burden and improve the employee experience. But as powerful as AI is, it introduces new challenges that HR leaders must address, particularly when it comes to HIPAA and AI data security.

How AI Is Used in Healthcare Benefits

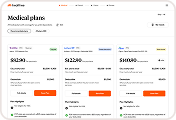

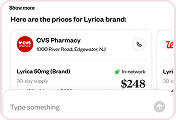

AI-powered platforms like Healthee use machine learning and natural language processing to recommend personalized health plans, automate cost and care navigation, analyze historical claims data, and more.

This level of functionality requires access to a high volume of sensitive data, including past claims, coverage details, and personal medical history. That is why HIPAA compliance is essential for any health tech platform using AI.

HIPAA Security Risks Introduced by AI

AI has the power to streamline healthcare benefits, but it also introduces new compliance challenges. For HR leaders, understanding these risks is essential to ensuring that the tools they adopt are HIPAA-secure and employee-safe.

Large-Scale Data Usage

AI models depend on massive datasets to function effectively. In healthcare, this often includes claims data, treatment histories, and insurance plan information — much of which qualifies as protected health information (PHI). If this data is not properly de-identified or encrypted, it can pose a serious risk to HIPAA compliance. Organizations using AI must confirm that all health data used for training or real-time insights is processed in accordance with HIPAA’s Privacy and Security Rules.
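
One common way to reduce how much PHI a model pipeline touches is to filter each record down to the fields a specific use case actually needs, in the spirit of HIPAA’s minimum necessary standard. The sketch below assumes a hypothetical claims record layout and a hypothetical allowlist for a cost-estimate feature. Filtering alone does not make data de-identified; it only narrows what flows downstream.

```python
from typing import AbstractSet, Any, Dict, Iterable, List

# Hypothetical allowlist: the fields a cost-estimate feature might need.
COST_ESTIMATE_FIELDS = frozenset({"plan_id", "procedure_code", "allowed_amount", "service_year"})


def minimize(records: Iterable[Dict[str, Any]], allowed: AbstractSet[str]) -> List[Dict[str, Any]]:
    """Return copies of each record that keep only allowlisted fields."""
    return [{key: value for key, value in record.items() if key in allowed} for record in records]


# Hypothetical claims record containing direct identifiers.
claims = [
    {
        "member_name": "Jane Doe",
        "ssn": "000-00-0000",
        "plan_id": "PPO-1",
        "procedure_code": "99213",
        "allowed_amount": 112.50,
        "service_year": 2024,
    }
]
training_rows = minimize(claims, COST_ESTIMATE_FIELDS)
```

An allowlist (deny by default) is used instead of a blocklist so that any new field added upstream is excluded until someone explicitly approves it.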

Automated Decision-Making

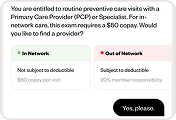

AI-driven tools are becoming essential in modern benefits strategies, offering personalized guidance on plan selection, care navigation, and provider recommendations. These tools boost efficiency and empower employees to make more informed choices. But with this innovation comes responsibility.

Employers need to ensure that any automated recommendations are explainable, compliant, and equitable. That’s why it’s critical to vet your benefits technology partner carefully. If the decision-making logic isn’t transparent, or if a tool steers employees toward or away from care without a clear rationale, it can raise compliance flags or create unintended consequences.

That’s where trusted partners like Healthee come in. Healthee’s AI-powered features, like the Plan Comparison Tool and Health Assistant, are built with transparency at the core. We prioritize explainability, data security, and inclusion, so your employees get smart, unbiased support, and you get peace of mind knowing it’s backed by a partner who understands the stakes.
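
Explainability can be as simple as returning the reasoning alongside the recommendation. The sketch below ranks plans with a common back-of-the-envelope formula (annual premiums plus expected out-of-pocket spending, capped at the plan’s out-of-pocket maximum) and returns the cost breakdown behind the ranking. The plan fields, the simplified cost model, and the figures are all hypothetical; this is not how Healthee or any specific product computes its recommendations.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_premium: float
    deductible: float
    coinsurance: float  # member's share after the deductible, e.g. 0.2 for 20%
    oop_max: float      # annual out-of-pocket maximum


def estimate(plan: Plan, expected_spend: float) -> Tuple[float, str]:
    """Return (estimated annual cost, explanation) under a simplified cost model."""
    premiums = plan.monthly_premium * 12
    if expected_spend <= plan.deductible:
        out_of_pocket = expected_spend
    else:
        out_of_pocket = plan.deductible + plan.coinsurance * (expected_spend - plan.deductible)
    out_of_pocket = min(out_of_pocket, plan.oop_max)
    total = premiums + out_of_pocket
    explanation = (
        f"{plan.name}: ${premiums:,.0f} in premiums + "
        f"${out_of_pocket:,.0f} estimated out-of-pocket = ${total:,.0f}"
    )
    return total, explanation


def recommend(plans: List[Plan], expected_spend: float) -> Tuple[Plan, List[str]]:
    """Pick the lowest estimated total and return every plan's explanation, cheapest first."""
    scored = sorted(
        ((*estimate(plan, expected_spend), plan) for plan in plans),
        key=lambda row: row[0],
    )
    return scored[0][2], [explanation for _, explanation, _ in scored]


# Hypothetical plans for illustration.
hdhp = Plan("HDHP", monthly_premium=150, deductible=3000, coinsurance=0.2, oop_max=6000)
ppo = Plan("PPO", monthly_premium=300, deductible=1000, coinsurance=0.2, oop_max=4000)
best, reasons = recommend([ppo, hdhp], expected_spend=2000)
```

With $2,000 of expected spending, the HDHP wins ($3,800 versus $4,800), and `reasons[0]` reads "HDHP: $1,800 in premiums + $2,000 estimated out-of-pocket = $3,800". At $20,000 of expected spending the same function picks the PPO ($7,600 versus $7,800), and the explanations show exactly why the answer changed.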

Third-Party Integrations

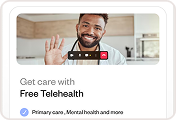

Many AI platforms rely on third-party services to deliver real-time benefits support — including insurance APIs, provider directories, or telehealth platforms. Each integration introduces a new access point to employee health data. Under HIPAA, these endpoints must be secured with technical safeguards such as access controls, encryption, and audit capabilities. Without proper security in place, these connections can become vulnerable entryways for data breaches or unauthorized access.
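
For API integrations, encryption in transit usually means TLS with certificate and hostname verification left switched on. The Python sketch below uses only the standard library and assumes a hypothetical insurer endpoint and a TLS 1.2 floor, which is a common baseline rather than a version HIPAA itself specifies.

```python
import ssl
import urllib.request

# Hypothetical endpoint; substitute the carrier's documented API URL.
INSURER_API_URL = "https://api.example-insurer.test/v1/eligibility"


def make_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies certificates and hostnames and refuses old protocols."""
    context = ssl.create_default_context()            # CERT_REQUIRED and check_hostname=True
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # reject SSL, TLS 1.0, and TLS 1.1
    return context


def fetch_eligibility(access_token: str) -> bytes:
    """Call the hypothetical insurer API over verified TLS with a bearer token."""
    request = urllib.request.Request(
        INSURER_API_URL,
        headers={"Authorization": f"Bearer {access_token}"},
    )
    with urllib.request.urlopen(request, context=make_tls_context(), timeout=10) as response:
        return response.read()
```

TLS only protects the connection itself; access controls on the token and audit logging of each call still need to be layered on top.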

Regulatory Considerations for AI Tools

HIPAA doesn’t directly mention artificial intelligence—but that doesn’t mean AI gets a free pass. If you’re using a platform that handles protected health information (PHI) with the help of AI, all HIPAA requirements still apply.

Covered entities and business associates must ensure that the use of AI upholds key HIPAA safeguards, including:

- Access controls to restrict who can view or modify PHI

- Audit trails that log how and when data is accessed or used

- Encryption protocols for data both in transit and at rest

- Ongoing risk assessments to identify vulnerabilities introduced by AI models or processes
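
Access controls and audit trails often work together: every attempt to read or change PHI is checked against a role and recorded, whether it is allowed or denied. The sketch below is a minimal illustration with hypothetical roles and permissions. It chains each log entry to the previous one with a SHA-256 hash so that after-the-fact edits are detectable; a real system would also persist the log to tamper-resistant storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "benefits_admin": {"read_coverage"},
    "claims_analyst": {"read_coverage", "read_claims"},
    "support_agent": set(),
}

GENESIS_HASH = "0" * 64


class AuditLog:
    """Append-only log where each entry embeds the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def append(self, user: str, action: str, record_id: str, allowed: bool) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "record_id": record_id,
            "allowed": allowed,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {key: value for key, value in entry.items() if key != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or deleted mid-log entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


def access_phi(log: AuditLog, user: str, role: str, action: str, record_id: str) -> bool:
    """Allow the action only if the role grants it; log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append(user, action, record_id, allowed)
    return allowed
```

Unknown roles fall back to an empty permission set, so access is denied by default. Note that a hash chain alone cannot detect entries removed from the end of the log; anchoring the latest hash somewhere external closes that gap.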

The National Institute of Standards and Technology (NIST) emphasizes that transparency and explainability are essential to maintaining trust in AI systems, especially in sensitive areas like healthcare. Its AI Risk Management Framework notes that “inscrutable AI systems can complicate risk measurement,” underscoring the need for clear documentation and understanding of AI decision-making processes [2].

For HR leaders, this means asking deeper questions: not just whether a platform claims HIPAA compliance, but how its AI systems demonstrate that compliance every day, at scale. When in doubt, choose a partner that can show their work.

Healthee’s Approach to HIPAA-Compliant AI Integration

Not all AI-powered health platforms are built with privacy and compliance at their core. At Healthee, HIPAA compliance is foundational, not a feature added after the fact. Our platform was designed from the ground up to ensure that every AI-driven interaction protects sensitive health data and empowers employees to navigate their benefits securely.

How Zoe Supports HIPAA Security

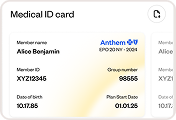

Zoe, Healthee’s AI-powered personal health assistant, offers 24/7 access to personalized care and cost information. From answering coverage questions to guiding plan comparisons during open enrollment, Zoe provides support that is fast, accurate, and HIPAA-compliant. Unlike general-purpose chatbots, Zoe is built with healthcare-specific applications in mind and designed to handle PHI securely while delivering real-time, relevant insights.

Every Zoe interaction is protected by multiple layers of security, including encryption, secure authentication, and strict role-based access controls, so that only authorized users can access health data. That means employees can use Zoe for plan comparison and provider navigation to make smarter healthcare decisions without compromising their personal data.

Built-In Safeguards That Go Beyond Compliance

All third-party integrations, including those with insurers and provider directories, are secured through encrypted APIs. These connections are continuously monitored for anomalies and threats, and our platform holds a SOC 2 Type II attestation, providing additional peace of mind for HR and IT teams.

Why Healthee’s Model Works

What makes Healthee different is how we blend user-friendly AI with enterprise-grade compliance. Many AI platforms force HR leaders to trade innovation off against risk; we believe you should never have to make that choice. Healthee’s HIPAA-compliant AI tools offer both a seamless employee experience and a rock-solid privacy foundation.

Best Practices for Evaluating AI Vendors for HIPAA Compliance

If a platform will be handling protected health information (PHI), it must demonstrate a clear and proactive commitment to HIPAA compliance. HR and benefits leaders play a critical role in making that call, and asking the right questions during the procurement process is key.

Start With a Risk Assessment

Before selecting any AI vendor, conduct a comprehensive risk assessment. Understand how the platform collects, stores, and transmits employee health data. Ask whether the vendor conducts its own risk assessments or commissions independent third-party ones, how often these are performed, and whether the results are available for review.

Look for vendors that follow a “privacy by design” model. This means HIPAA security isn’t just layered on at the end but is foundational to how the platform operates.
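
A risk assessment does not need to be elaborate to be useful. One common qualitative approach scores each identified risk by likelihood and impact, then ranks the results so the most serious items get attention first. The sketch below assumes a hypothetical 1 to 5 scale, hypothetical triage thresholds, and a few example risks; HIPAA’s Security Rule requires a risk analysis but does not prescribe this particular scoring method.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain); hypothetical scale
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Hypothetical thresholds for triage.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def rank(risks: List[Risk]) -> List[Risk]:
    """Return risks with the highest scores first."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)


# Hypothetical entries for an AI benefits vendor review.
register = rank([
    Risk("Vendor API keys stored in plaintext config", likelihood=3, impact=5),
    Risk("Model training set includes unredacted member names", likelihood=2, impact=5),
    Risk("Stale accounts for departed HR staff", likelihood=4, impact=3),
])
for risk in register:
    print(f"{risk.level:>6}  {risk.score:>2}  {risk.description}")
```

The value of a register like this is less in the exact numbers than in forcing each risk to be written down, scored consistently, and revisited when the vendor’s AI features change.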

Ask About Data De-Identification

AI systems thrive on data, but that data does not have to include personal identifiers. Ask whether your vendor trains its AI models on de-identified data. HIPAA recognizes two de-identification methods: Safe Harbor, which requires removing 18 types of identifiers (such as names, geographic subdivisions smaller than a state, all elements of dates except the year, and Social Security numbers), and Expert Determination, in which a qualified expert concludes that the risk of re-identifying individuals is very small.

If a vendor uses real-world PHI in any part of their system, they should be able to clearly explain how it’s protected and whether encryption is applied at rest and in transit.
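
To make the Safe Harbor idea concrete, the Python sketch below applies a few of its transformations to a single record: dropping direct identifiers, truncating ZIP codes to three digits (or "000" for sparsely populated prefixes), reducing dates to the year, and collapsing ages over 89 into one category. The field names and ZIP prefix list are hypothetical placeholders, and the sketch covers only part of the 18 identifier categories. Safe Harbor also requires that the organization have no actual knowledge that the remaining data could identify someone, and free-text fields need separate handling.

```python
from datetime import date
from typing import Any, Dict

# Hypothetical field names for direct identifiers (not all 18 Safe Harbor categories).
DIRECT_IDENTIFIERS = {
    "name", "street_address", "city", "phone", "email", "ssn",
    "member_id", "medical_record_number", "ip_address",
}
# Illustrative entries only: the full list of 3-digit ZIP prefixes covering
# 20,000 or fewer people must be derived from current Census data.
RESTRICTED_ZIP3 = {"036", "059"}


def safe_harbor_sketch(record: Dict[str, Any], as_of: date) -> Dict[str, Any]:
    """Apply a few Safe Harbor-style transformations; NOT a complete de-identification."""
    out = {key: value for key, value in record.items() if key not in DIRECT_IDENTIFIERS}

    if "zip" in out:
        # Keep only the first three digits, or "000" for sparsely populated prefixes.
        zip3 = str(out.pop("zip"))[:3]
        out["zip3"] = "000" if zip3 in RESTRICTED_ZIP3 else zip3

    if "service_date" in out:
        out["service_year"] = out.pop("service_date").year  # dates reduced to the year

    if "birth_date" in out:
        birth = out.pop("birth_date")
        had_birthday = (as_of.month, as_of.day) >= (birth.month, birth.day)
        age = as_of.year - birth.year - (0 if had_birthday else 1)
        # Ages over 89 collapse into a single "90+" category; the birth date is dropped.
        out["age"] = "90+" if age > 89 else age
    return out
```

When asking a vendor about de-identification, it is reasonable to request this level of detail: which fields are removed or generalized, which method (Safe Harbor or Expert Determination) is relied on, and who reviewed it.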

Evaluate Technical Safeguards

Encryption, access control, and audit logging aren’t optional — they are fundamental requirements for HIPAA-compliant systems. Ask your vendor how they handle user authentication, who can access employee data, and whether they maintain a full audit trail of system interactions. A mature AI platform should offer detailed activity logs and system alerts in the event of unauthorized access attempts.

You should also confirm that the platform can integrate securely with other systems you use. Every integration point — whether it’s an API with an insurance carrier or a sync with a payroll provider — needs to meet HIPAA’s technical standards.
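
System alerts for unauthorized access attempts can start with something as simple as counting failed attempts per account within a sliding time window. The threshold, window, and class name below are hypothetical, and the sketch assumes events for each account arrive in chronological order; production systems typically forward such events to a monitoring or SIEM service rather than alerting in-process.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Deque, Dict


class FailedAccessMonitor:
    """Flags an account when failed attempts inside a sliding window reach a threshold."""

    def __init__(self, threshold: int = 5, window: timedelta = timedelta(minutes=10)) -> None:
        self.threshold = threshold
        self.window = window
        self._failures: Dict[str, Deque[datetime]] = defaultdict(deque)

    def record_failure(self, account: str, at: datetime) -> bool:
        """Record a failed attempt (events assumed in time order); return True if an alert should fire."""
        attempts = self._failures[account]
        attempts.append(at)
        # Discard attempts that have fallen outside the window.
        while attempts and at - attempts[0] > self.window:
            attempts.popleft()
        return len(attempts) >= self.threshold
```

A deque per account keeps each check proportional to the number of recent attempts, and tracking accounts separately means one user’s mistyped passwords never mask a targeted attack on another account.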

Confirm Internal Training and Support

HIPAA compliance isn’t just about systems — it’s also about people. Ask whether the vendor’s team receives HIPAA training and how they respond to incidents involving data. A strong partner will have dedicated compliance leads and be able to provide support documentation if a breach ever occurs.

If you’re unsure what questions to ask, Healthee’s implementation team offers support for evaluating vendor compliance and shares key questions HR teams should bring to every tech conversation.

When HIPAA Meets AI

As AI becomes a core part of healthcare and benefits delivery, HIPAA compliance must remain front and center. The rise of intelligent systems can improve the employee experience, lower costs, and simplify HR workloads — but only if those systems are built on a foundation of data security and regulatory integrity.

For HR leaders, choosing the right AI-powered benefits platform means looking beyond bells and whistles. It means asking tough questions about data protection, transparency, and risk. Platforms like Healthee are designed to meet these challenges head-on by embedding HIPAA security into every layer of the user experience, from Zoe’s AI conversations to real-time claims data integration.

If you’re exploring ways to deliver smarter, safer benefits, start by choosing tools that respect employee privacy and meet the highest standards for security. Your people deserve technology they can trust, and so does your HR team.

Disclaimer: This blog is intended for informational and educational purposes only and does not constitute legal advice. For guidance on specific compliance obligations under HIPAA or other applicable laws, organizations should consult with qualified legal counsel specializing in healthcare privacy and data security.

References

1. U.S. Department of Health & Human Services. (2023). HIPAA enforcement highlights. HHS.gov. https://www.hhs.gov/hipaa/for-professionals/compliance-enforcement/data/enforcement-highlights/index.html

2. National Institute of Standards and Technology. (2023). AI Risk Management Framework (NIST AI 100-1). https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf